Cory LaNou

Building a Production-Ready SEO Validator in 4 Hours

Overview

As a senior developer with 20+ years in the trenches, I built a fully functional, production-ready SEO validation system in under 4 hours using AI assistance. Ten years ago, this would have taken me weeks. But here's the key insight: if I weren't a senior-level developer, AI would never have gotten this to work. This is the story of how AI amplifies expertise rather than replacing it, complete with real metrics, mistakes made, and lessons learned.

Target Audience

This article is aimed at senior developers, technical leads, and CTOs who want to understand the real-world impact of AI-assisted development, as well as developers curious about building production systems with Go, HTMX, and modern web technologies.

How AI Supercharged My Development: From Weeks to Hours

As a senior developer with 20+ years in the trenches, I've witnessed the evolution of our craft from the early days of web development to cloud computing. In the late '90s, I wrote one of the world's first and leading ecommerce engines at cdw.com (Amazon was barely a thing back then). But nothing prepared me for the productivity leap I experienced recently when building a comprehensive SEO validation platform for my family's businesses, and for my 17-year-old entrepreneur son, Logan.

The result? A fully functional, production-ready system in under 4 hours. Ten years ago, this would have taken me weeks.

The Challenge: Real Business Needs

Logan runs several online businesses, and understanding SEO performance across multiple domains was becoming critical. We needed a tool that could:

- Crawl multiple websites simultaneously with thousands of pages

- Analyze SEO metrics comprehensively (meta tags, Open Graph, Schema.org, Twitter Cards)

- Provide real-time dashboards with actionable insights

- Support multi-user organizations with role-based access

- Scale efficiently without breaking the bank

The Tech Stack: Modern Go Architecture

I chose Go as the foundation for its legendary concurrency model—perfect for web crawling at scale. Here's what we built:

Backend Architecture

- Go 1.24 with Echo framework for HTTP handling

- SQLite with SQLC for type-safe database operations

- Concurrent crawling engine using goroutines and channels

- Google OAuth 2.0 for authentication

- Automated database migrations with Goose

Frontend & UI

- Templ for type-safe HTML templating (compile-time validation)

- Tailwind CSS for utility-first styling

- HTMX for dynamic interactions without heavy JavaScript

- Alpine.js for lightweight interactivity

- Responsive design that works on all devices

Crawling & Analysis Engine

- Configurable concurrent crawling (default: 200 pages, 5 concurrent requests)

- Comprehensive SEO analysis: Title, description, keywords, meta tags, charset, viewport

- Open Graph validation with image accessibility checking

- Schema.org Product markup detection and validation

- Twitter Card support

- Real-time status tracking with animated indicators
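One way to read "image accessibility checking" is a cheap HEAD request against the `og:image` URL. The sketch below assumes exactly that: `imageReachable` and `demo` are hypothetical names, not the project's actual functions, and a local `httptest` server stands in for a real site.

```go
package main

import (
	"context"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
	"time"
)

// imageReachable reports whether imageURL answers a HEAD request with a 2xx
// status and an image/* Content-Type. Hypothetical helper for illustration.
func imageReachable(ctx context.Context, client *http.Client, imageURL string) (bool, error) {
	req, err := http.NewRequestWithContext(ctx, http.MethodHead, imageURL, nil)
	if err != nil {
		return false, fmt.Errorf("build request: %w", err)
	}
	resp, err := client.Do(req)
	if err != nil {
		return false, fmt.Errorf("fetch image: %w", err)
	}
	defer resp.Body.Close()

	okStatus := resp.StatusCode >= 200 && resp.StatusCode < 300
	isImage := strings.HasPrefix(resp.Header.Get("Content-Type"), "image/")
	return okStatus && isImage, nil
}

// demo checks one existing and one missing image against a local test server.
func demo() (good, bad bool) {
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path == "/og.png" {
			w.Header().Set("Content-Type", "image/png")
			return // implicit 200 OK
		}
		http.NotFound(w, r)
	}))
	defer srv.Close()

	// 30 seconds mirrors the per-request timeout mentioned in the article.
	client := &http.Client{Timeout: 30 * time.Second}
	ctx := context.Background()
	good, _ = imageReachable(ctx, client, srv.URL+"/og.png")
	bad, _ = imageReachable(ctx, client, srv.URL+"/missing.png")
	return good, bad
}

func main() {
	good, bad := demo()
	fmt.Println("existing image reachable:", good)
	fmt.Println("missing image reachable:", bad)
}
```

Some servers reject HEAD requests, so a real checker would probably fall back to a GET before reporting the image as broken.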

Data Architecture

- SQLite for lightweight, zero-configuration database

- Proper foreign key constraints with cascading deletes

- Bulk operations for efficient URL insertion

- Indexed queries for fast lookups

- Migration-based schema evolution

Deployment: Enterprise Features at Startup Costs

The entire platform is deployed on a DigitalOcean droplet that hosts this and several other sites for a whopping $6/month in total hosting fees. That is effectively no added cost, since I already host other sites on the same server. You can also run it locally at zero cost if you prefer.

Development Tools: The AI Editor Experience

For this project, I used Cursor, the AI-powered code editor. My experience with it was revealing:

Agent Mode vs Manual Control

I started using Cursor mostly in agent mode, but quickly found that auto-completions kept making bad decisions. The turning point came when I switched to using Claude Sonnet 4 exclusively—the accuracy improved significantly. Yes, I burned through credits faster, but still stayed well within my basic $20/month plan.

The Screenshot Feedback Loop

Here's a workflow tip that saved me hours: When the AI got templates wrong (which happened frequently), I'd take screenshots of the rendered output and feed them back into the chat. "Here's what you generated, and here's why it's broken." Visual feedback was often more effective than trying to describe the problem in words.

The HTMX Challenge

If there was one consistent pain point, it was HTMX. The AI consistently struggled with:

- Wrong target attributes - causing content to replace the wrong elements

- Missing hx-swap directives - leading to broken layouts

- Incorrect hx-trigger configurations - buttons that didn't work as expected

- Page layout corruption - clicking a button and suddenly your entire layout disappears

Most of my development time was probably spent fixing HTMX code. The AI never quite caught on to the patterns, even after dozens of corrections. It's a reminder that while AI can accelerate development, some technologies still require that human touch to get right.
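A common cause of the "layout disappears" symptom is a handler that sends a full page where htmx expected a fragment, or the reverse. The sketch below shows one way to guard against it, assuming plain `net/http` rather than Echo to stay dependency-free; the route, element id, and function names are illustrative. htmx sets the `HX-Request: true` header on its requests, which is what the handler checks.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// statusFragment is the only markup an htmx request should receive.
const statusFragment = `<div id="crawl-status">Status: crawling</div>`

// fullPage wraps the fragment in the site layout for normal page loads.
// The button names its target and swap strategy explicitly, so the response
// replaces only the status element.
const fullPage = `<!doctype html>
<html><body>
<nav>SEO Validator</nav>
<button hx-get="/status" hx-target="#crawl-status" hx-swap="outerHTML">Refresh</button>
` + statusFragment + `
</body></html>`

// statusHandler returns the bare fragment for htmx requests and the full
// page for everything else.
func statusHandler(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "text/html; charset=utf-8")
	if r.Header.Get("HX-Request") == "true" {
		fmt.Fprint(w, statusFragment)
		return
	}
	fmt.Fprint(w, fullPage)
}

// render calls the handler in-process and returns the response body.
func render(htmx bool) string {
	req := httptest.NewRequest(http.MethodGet, "/status", nil)
	if htmx {
		req.Header.Set("HX-Request", "true")
	}
	rec := httptest.NewRecorder()
	statusHandler(rec, req)
	return rec.Body.String()
}

func main() {
	fmt.Println("htmx response contains layout:", strings.Contains(render(true), "<nav>"))
	fmt.Println("full response contains layout:", strings.Contains(render(false), "<nav>"))
}
```

Because the fragment carries the same `id` as the element it replaces, `outerHTML` swaps keep working on repeated clicks.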

The AI-Assisted Development Experience

Let me be abundantly clear: if I weren't a senior-level developer, AI would never have gotten this to work. AI works when you are already a master of your craft and give it clear goals, objectives, and instructions on what technology to use and how to use it.

What AI Got Right

The AI assistant excelled at:

- Architectural decisions: Suggesting proper separation of concerns

- Database schema design: Creating normalized tables with proper relationships

- Concurrent programming patterns: Implementing Go's channels and goroutines correctly

- Error handling: Comprehensive error capture and user-friendly messages

- Testing: Generating thorough test suites with edge cases

What AI Got Wrong (And Why That's Still Valuable)

The AI made numerous mistakes:

- Template syntax errors that required manual fixes

- Database query optimization that needed refinement

- Authentication flow edge cases that weren't initially handled

- CSS styling inconsistencies across different components

- Configuration management that was overly complex initially

But here's the key insight: Even with these mistakes, the AI provided a solid foundation that I could iterate on rapidly. Instead of starting from scratch, I spent my time refining and perfecting rather than architecting and scaffolding.

Performance: The Go Advantage

The performance results speak for themselves:

    // Concurrent crawling configuration
    type CrawlerConfig struct {
        MaxPages            int           // 200 pages default
        MaxConcurrent       int           // 5 concurrent requests
        Timeout             time.Duration // 10 minutes total
        RequestTimeout      time.Duration // 30 seconds per request
        MaxDepth            int           // 15 levels deep
        FollowExternalLinks bool          // false: internal links only
        PerformSEOAnalysis  bool          // real-time analysis during the crawl
    }

Real-world performance:

- Crawls 200+ pages in 1-2 seconds on average sites

- Concurrent SEO analysis during crawling (no double-fetching)

- Real-time status updates with animated progress indicators

- Background processing that doesn't block the UI

This is where Go's concurrency model truly shines. Each URL is processed in its own goroutine, with careful coordination using channels and semaphores to prevent overwhelming target servers.
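The goroutine-per-URL pattern with a semaphore can be sketched as follows. This is a minimal illustration rather than the project's crawler: `processAll` is a hypothetical name, and the `work` callback stands in for fetching and analyzing a page.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// processAll runs work for each URL in its own goroutine but lets at most
// maxConcurrent of them run at once. It returns the highest number of
// workers observed in flight at the same time.
func processAll(urls []string, maxConcurrent int, work func(string)) int32 {
	sem := make(chan struct{}, maxConcurrent) // buffered channel used as a semaphore
	var wg sync.WaitGroup
	var inFlight, peak int32

	for _, u := range urls {
		wg.Add(1)
		go func(u string) {
			defer wg.Done()
			sem <- struct{}{}        // acquire a slot (blocks when all are taken)
			defer func() { <-sem }() // release the slot on return

			n := atomic.AddInt32(&inFlight, 1)
			// Record a new peak if this worker pushed the count higher.
			for {
				p := atomic.LoadInt32(&peak)
				if n <= p || atomic.CompareAndSwapInt32(&peak, p, n) {
					break
				}
			}
			work(u)
			atomic.AddInt32(&inFlight, -1)
		}(u)
	}
	wg.Wait()
	return atomic.LoadInt32(&peak)
}

func main() {
	urls := make([]string, 20)
	for i := range urls {
		urls[i] = fmt.Sprintf("https://example.com/page-%d", i)
	}
	// Simulated fetch: each "request" takes 5ms.
	peak := processAll(urls, 5, func(string) { time.Sleep(5 * time.Millisecond) })
	fmt.Println("peak concurrent workers:", peak)
}
```

The limit applies to in-flight work, not to the number of goroutines: all twenty start immediately, and fifteen of them wait on the channel.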

Advanced Features: Production-Ready from Day One

Multi-Tenant Architecture

    // Organization-based access control
    type Organization struct {
        ID          int64
        Name        string
        Slug        string
        Description string
        CreatedAt   time.Time
        UpdatedAt   time.Time
    }

    // Role-based permissions
    const (
        RoleSuperAdmin = "super_admin"
        RoleAdmin      = "admin"
        RoleMember     = "member"
        RoleViewer     = "viewer"
    )
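A permission check on top of these role constants might look like the sketch below. The privilege ordering (viewer < member < admin < super_admin) and the `HasAtLeast` helper are assumptions for illustration; the article does not show how the project compares roles.

```go
package main

import "fmt"

const (
	RoleSuperAdmin = "super_admin"
	RoleAdmin      = "admin"
	RoleMember     = "member"
	RoleViewer     = "viewer"
)

// roleRank orders roles from least to most privileged (assumed ordering).
var roleRank = map[string]int{
	RoleViewer:     1,
	RoleMember:     2,
	RoleAdmin:      3,
	RoleSuperAdmin: 4,
}

// HasAtLeast reports whether userRole is at least as privileged as required.
// Unknown roles on either side are denied.
func HasAtLeast(userRole, required string) bool {
	u, okUser := roleRank[userRole]
	r, okRequired := roleRank[required]
	return okUser && okRequired && u >= r
}

func main() {
	fmt.Println(HasAtLeast(RoleAdmin, RoleMember))  // admin may do what a member can
	fmt.Println(HasAtLeast(RoleViewer, RoleAdmin))  // viewer may not act as admin
	fmt.Println(HasAtLeast("guest", RoleViewer))    // unknown role is denied
}
```

Denying unknown roles by default keeps a typo in a role string from silently granting access.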

Comprehensive SEO Analysis

The system analyzes:

- Basic SEO: Title, description, keywords, author, charset

- Open Graph: Full tag validation with image accessibility checking

- Schema.org: Product markup detection and validation

- Twitter Cards: Complete metadata analysis

- Technical SEO: Canonical URLs, robots meta, viewport settings
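The checks in this list can be modeled as rules over values already extracted from a page. In the sketch below, `PageMeta`, `Issue`, and `Validate` are hypothetical names, and the split between errors and warnings is an assumption rather than the project's actual rule set.

```go
package main

import (
	"fmt"
	"strings"
)

type Severity string

const (
	SeverityError   Severity = "error"
	SeverityWarning Severity = "warning"
)

// Issue is one finding for a page.
type Issue struct {
	Severity Severity
	Field    string
	Message  string
}

// PageMeta holds values already extracted from a page's <head>.
type PageMeta struct {
	Title, Description, Charset, Viewport, Canonical, OGImage string
}

// Validate returns one Issue per missing field. Which fields count as errors
// versus warnings is an illustrative choice.
func Validate(m PageMeta) []Issue {
	var issues []Issue
	check := func(value string, sev Severity, field, msg string) {
		if strings.TrimSpace(value) == "" {
			issues = append(issues, Issue{Severity: sev, Field: field, Message: msg})
		}
	}
	check(m.Title, SeverityError, "title", "missing <title>")
	check(m.Description, SeverityError, "description", "missing meta description")
	check(m.Charset, SeverityError, "charset", "missing charset definition")
	check(m.Viewport, SeverityWarning, "viewport", "missing viewport meta tag")
	check(m.Canonical, SeverityWarning, "canonical", "missing canonical URL")
	check(m.OGImage, SeverityWarning, "og:image", "missing Open Graph image")
	return issues
}

func main() {
	page := PageMeta{Title: "Home", Description: "Welcome", Viewport: "width=device-width"}
	for _, is := range Validate(page) {
		fmt.Printf("[%s] %s: %s\n", is.Severity, is.Field, is.Message)
	}
}
```

Keeping severity on each issue is what lets a dashboard sort errors ahead of warnings.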

Real-Time Crawling Status

    // Live status tracking
    type CrawlStatus struct {
        Status       string // "pending", "crawling", "completed", "failed"
        Started      *time.Time
        Completed    *time.Time
        URLsFound    int64
        ErrorCount   int
        WarningCount int
    }

The Business Impact

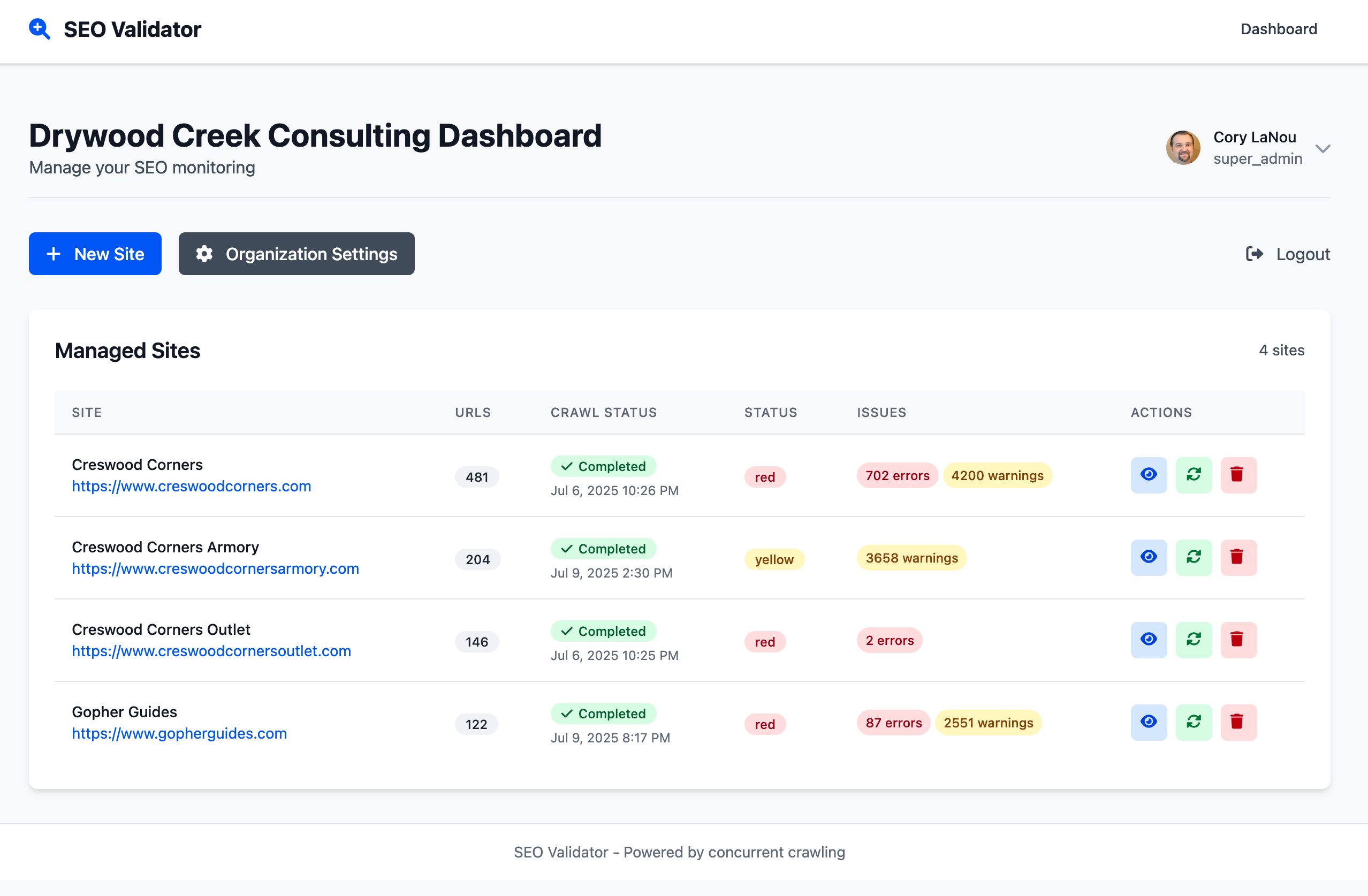

The tool has already proven invaluable for Logan's businesses:

Immediate Insights

- 4 sites monitored across different business verticals

- Only 1 site in excellent SEO health (eye-opening!)

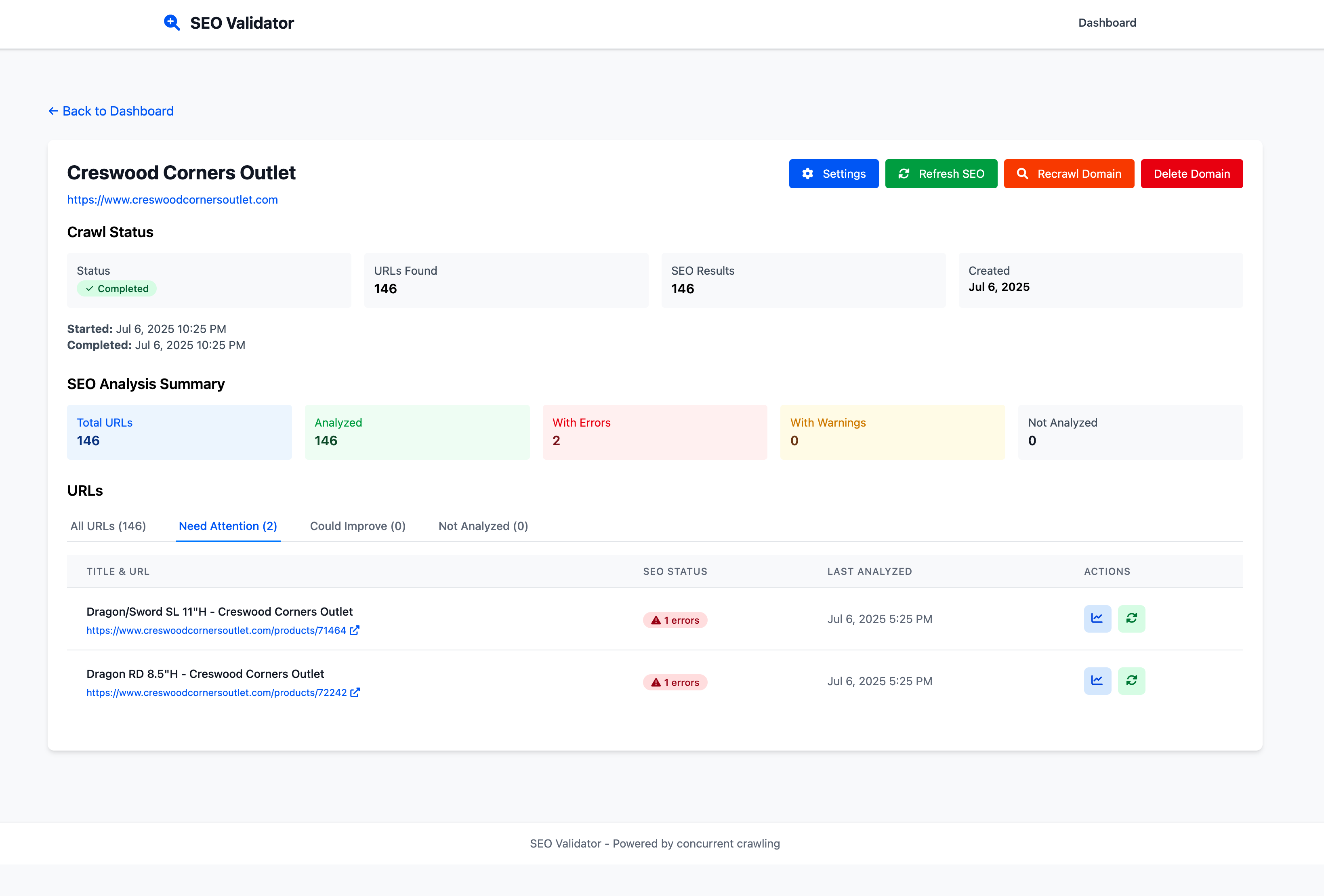

- Most issues are simple fixes: missing charset definitions, meta descriptions

- Actionable guidance for each identified problem

Educational Value

For Logan, this tool is a masterclass in SEO:

- Visual feedback on what good SEO looks like

- Clear prioritization of errors vs. warnings

- Real-time validation when he makes improvements

- Understanding of technical SEO beyond just keywords

Code Quality: Enterprise Standards

Despite the rapid development, we maintained high standards:

Comprehensive Testing

    func TestCrawler_BasicFunctionality(t *testing.T) {
        // Test server setup
        server := httptest.NewServer(/* ... */)
        defer server.Close()

        // Crawler configuration
        config := CrawlerConfig{
            MaxPages:      10,
            MaxConcurrent: 2,
            Timeout:       30 * time.Second,
        }

        // Test execution and validation
        results, err := crawler.Crawl(ctx)
        // ... comprehensive assertions
    }

Type Safety

Using SQLC for database operations ensures compile-time safety:

    // Generated type-safe database operations
    func (q *Queries) CreateDomain(ctx context.Context, arg CreateDomainParams) (Domain, error) {
        // Auto-generated, type-safe SQL execution
    }

Configuration Management

Environment-based configuration with sensible defaults:

    type Config struct {
        Server   ServerConfig
        Crawler  CrawlerConfig
        Database DatabaseConfig
        Auth     AuthConfig
    }
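Environment-based configuration with defaults can be sketched as below. The variable names (`CRAWLER_MAX_PAGES` and so on) and the `LoadCrawlerConfig` helper are hypothetical; the default values come from the crawler settings quoted earlier in the article. The lookup function is passed in so the loader can be exercised without touching the real environment.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
	"time"
)

// CrawlerConfig is a trimmed-down version of the crawler settings.
type CrawlerConfig struct {
	MaxPages       int
	MaxConcurrent  int
	RequestTimeout time.Duration
}

// lookupFunc matches the signature of os.LookupEnv.
type lookupFunc func(key string) (string, bool)

// intOr returns the integer stored under key, or def if unset or invalid.
func intOr(lookup lookupFunc, key string, def int) int {
	if v, ok := lookup(key); ok {
		if n, err := strconv.Atoi(v); err == nil {
			return n
		}
	}
	return def
}

// durationOr returns the duration stored under key (e.g. "45s"), or def.
func durationOr(lookup lookupFunc, key string, def time.Duration) time.Duration {
	if v, ok := lookup(key); ok {
		if d, err := time.ParseDuration(v); err == nil {
			return d
		}
	}
	return def
}

// LoadCrawlerConfig reads hypothetical CRAWLER_* variables, falling back to
// the defaults the article lists (200 pages, 5 concurrent, 30s per request).
func LoadCrawlerConfig(lookup lookupFunc) CrawlerConfig {
	return CrawlerConfig{
		MaxPages:       intOr(lookup, "CRAWLER_MAX_PAGES", 200),
		MaxConcurrent:  intOr(lookup, "CRAWLER_MAX_CONCURRENT", 5),
		RequestTimeout: durationOr(lookup, "CRAWLER_REQUEST_TIMEOUT", 30*time.Second),
	}
}

func main() {
	cfg := LoadCrawlerConfig(os.LookupEnv)
	fmt.Printf("%+v\n", cfg)
}
```

Silently falling back on an unparsable value is a design choice for the sketch; a stricter loader would return an error so a typo in a deployment file is caught at startup.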

Lessons Learned: The Future of Development

AI as a Force Multiplier

AI coding assistants aren't replacing developers—they're amplifying our capabilities. The key is understanding their strengths and limitations:

Use AI for:

- Initial architecture and scaffolding

- Boilerplate code generation

- Test case creation

- Documentation writing

- Pattern implementation

Human expertise remains critical for:

- Business logic validation

- Performance optimization

- Security considerations

- User experience design

- System integration

The Iteration Advantage

With AI handling the heavy lifting of initial implementation, I could focus on:

- Rapid prototyping of features

- Real-time testing with actual data

- User experience refinement

- Performance optimization

- Security hardening

Technical Deep Dive: Key Implementation Details

Concurrent Crawling Engine

    // Semaphore-based concurrency control
    type Crawler struct {
        config    CrawlerConfig
        baseURL   *url.URL
        visited   map[string]bool
        visitedMu sync.RWMutex
        results   []CrawlResult
        resultsMu sync.Mutex
        semaphore chan struct{}
        client    *http.Client
    }

    func (c *Crawler) crawlURL(ctx context.Context, targetURL string, depth int) CrawlResult {
        // Acquire a semaphore slot so only a limited number of requests run
        // at once; release it when this call returns
        c.semaphore <- struct{}{}
        defer func() { <-c.semaphore }()

        // ... fetching targetURL and parsing the response into doc is elided ...

        // SEO analysis during crawling
        if c.config.PerformSEOAnalysis {
            seoResult, err := AnalyzeSEOFromDocument(targetURL, doc)
            // Handle results...
        }

        // ... building and returning the CrawlResult is elided ...
    }
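The `visited` map and its mutex exist so that no URL is crawled twice. The excerpt above does not show how the project guards that map, so the following is only a sketch of one safe approach: do the check and the write under a single lock, so two goroutines cannot both claim the same URL. `visitedSet`, `markVisited`, and `claimConcurrently` are hypothetical names.

```go
package main

import (
	"fmt"
	"sync"
)

// visitedSet tracks which URLs have already been claimed by a worker.
type visitedSet struct {
	mu   sync.Mutex
	seen map[string]bool
}

func newVisitedSet() *visitedSet {
	return &visitedSet{seen: make(map[string]bool)}
}

// markVisited records url and reports whether this call was the first to
// see it. The check and the write share one critical section.
func (v *visitedSet) markVisited(url string) bool {
	v.mu.Lock()
	defer v.mu.Unlock()
	if v.seen[url] {
		return false
	}
	v.seen[url] = true
	return true
}

// claimConcurrently has n goroutines race to claim the same URL and returns
// how many of them succeeded.
func claimConcurrently(v *visitedSet, url string, n int) int {
	var wg sync.WaitGroup
	wins := make(chan struct{}, n)
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			if v.markVisited(url) {
				wins <- struct{}{}
			}
		}()
	}
	wg.Wait()
	return len(wins)
}

func main() {
	v := newVisitedSet()
	fmt.Println("goroutines that claimed the URL:", claimConcurrently(v, "https://example.com/", 100))
}
```

With a read-write mutex, checking under the read lock and then writing under the write lock leaves a gap in which two workers can both see the URL as unvisited; the combined check-and-set avoids that.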

Database Schema Evolution

    -- Migration-based schema management
    CREATE TABLE domains (
        id INTEGER PRIMARY KEY AUTOINCREMENT,
        name TEXT NOT NULL UNIQUE,
        base_url TEXT NOT NULL,
        organization_id INTEGER,
        crawl_status TEXT DEFAULT 'pending',
        crawl_started_at DATETIME,
        crawl_completed_at DATETIME,
        FOREIGN KEY (organization_id) REFERENCES organizations(id)
    );

Authentication Flow

    // Google OAuth with session management
    func (a *AuthService) ExchangeCodeForUser(ctx context.Context, code string) (*types.User, error) {
        token, err := a.oauthConfig.Exchange(ctx, code)
        if err != nil {
            return nil, fmt.Errorf("failed to exchange code for token: %w", err)
        }

        // Get user info and create/update database record
        userInfo, err := a.getUserInfo(ctx, token)
        // ... implementation
    }

The Bottom Line: ROI of AI-Assisted Development

Time Investment: 4 hours

Traditional Estimate: 2-3 weeks

Time Saved: 95%+

What This Means:

- Faster time-to-market for business-critical tools

- More time for refinement and user experience

- Ability to experiment with complex features

- Reduced development risk through rapid prototyping

Looking Forward: The New Development Paradigm

This project represents a fundamental shift in how we approach software development:

- AI handles the boilerplate → Developers focus on business logic

- Rapid iteration cycles → Faster validation of ideas

- Higher quality baselines → More time for optimization

- Complex features become accessible → Smaller teams can build more

For Logan's businesses, this tool provides ongoing value through:

- Automated SEO monitoring across all his sites

- Clear action items for improvement

- Performance tracking over time

- Scalable architecture as his businesses grow

Conclusion: The Human-AI Partnership

The future of software development isn't about AI replacing developers—it's about amplifying human creativity and expertise. This project proved that with the right AI assistant, a senior developer can build production-ready systems in hours rather than weeks.

The key is maintaining the critical thinking, business acumen, and technical judgment that only comes from years of experience, while leveraging AI to handle the mechanical aspects of implementation.

For fellow developers: Embrace these tools, but remember that your expertise in system design, user experience, and business logic remains irreplaceable. AI is a powerful ally when you're already a master of your craft.

For business leaders: The development velocity gains are real, but the human element—understanding your users, making architectural decisions, and ensuring quality—remains critical.

The SEO Validator is now helping Logan optimize his online businesses, providing real-time insights that would have taken weeks to build just a few years ago. And that's the real victory: technology serving business needs at the speed of thought.

While this code might not be perfect and might need a few updates to be enterprise-ready, it's a great tool that I intend to use rather than sell, and for that it more than hit the mark. I do plan to open source it as well, so anyone who wants to run it locally or deploy it for their own needs is welcome to (you just can't sell it or use it to make money, though free corporate use is fine as well… license incoming 😊).

Meta: This Article Was Also AI-Assisted

In the spirit of full transparency, I used AI to develop this article itself. The process perfectly mirrors the development experience I've been describing:

I started in Cursor with this prompt:

"I want to write a linked in blog about this project. Talk about the tech stack. Mention that as a senior dev of 20+ years, I was easily able to create a very complex system, including oauth (google), web crawling, seo validation, etc. in under 4 hours. This task, 10 years ago, would have been weeks of effort. Now, I was able to tell it VERY specifically how I wanted the project structured, exactly how I wanted the technology used, and it still got SO much wrong, But after 4 hours of me telling it what it did wrong it was still by far the best choice vs me starting this from scratch. The project is absolutely amazing, as most of the sites I'm running as side projects are for my families business (and my 17 year old entrepreneur son Logan). These tools help him understand his page ranking, what he needs to fix on his services etc. As you'll see from the dashboard, only one site is in really good shape. However, most of the errors are simple like "missing character set" defined, etc. So he doesn't have a lot of work in front of him, but this tool will easily guide him. Also, because it's written in go, it crawls and validates thousands of pages in 1-2 seconds. Gotta love Go's concurrency model."

I then took the output, put it in a gist, and fed it to Claude Opus 4 (which excels at deep thinking and article writing like this).

After a dozen prompts to correct things it got wrong and add a few more sections (like this one you're reading right now), the article was ready. It was written in my voice—I have it read my other blog articles before writing anything for me—and then I was done.

The result? Something that would have taken 4-6 hours to write was completed in 20 minutes (most of it spent proofreading). Just like the SEO validator itself, the AI didn't get everything right on the first try, but the acceleration in productivity was undeniable.
